To get here, we first had to work out the point-slope formula and then figure out limits. Derivatives are very powerful. This post was inspired by doing gradient descent on artificial neural networks, but I won’t cover that here. Instead, we will focus on the definition of a derivative itself.

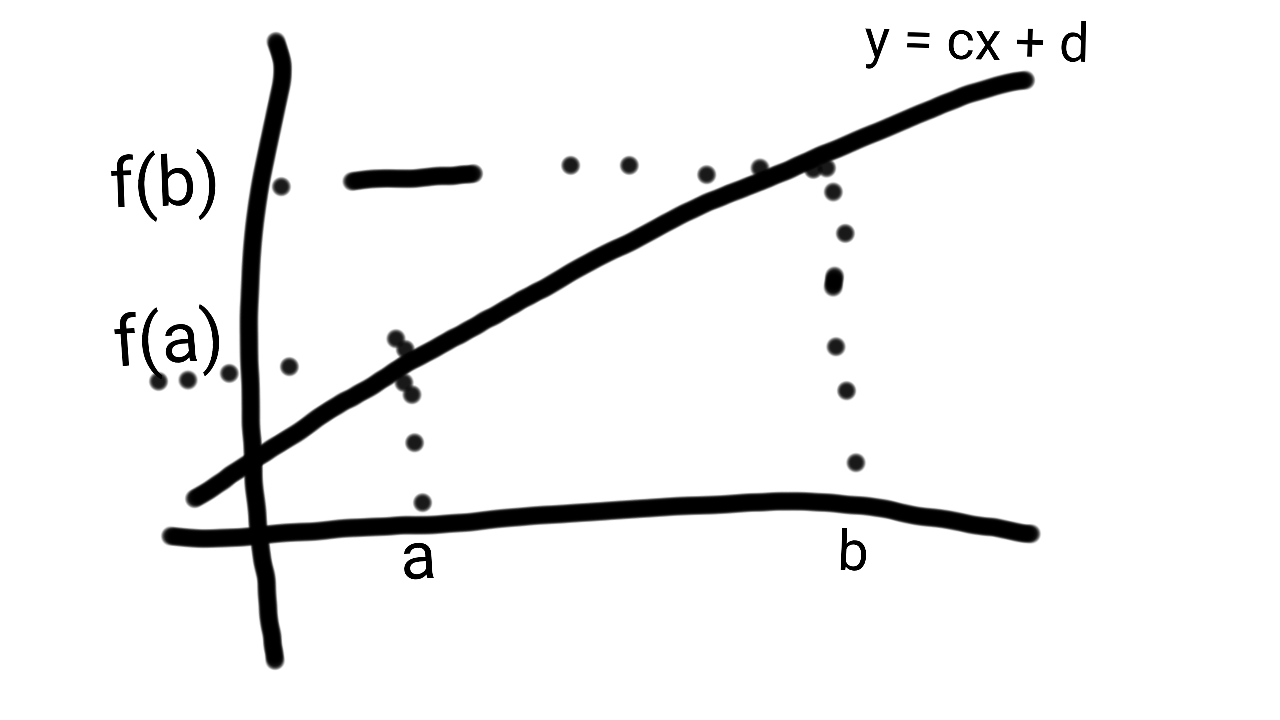

So let’s get started. A secant is a line that goes through 2 points. In the graph below, the points are $(x, f(x))$ and $(x + dx, f(x + dx))$.

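To derive a formula for this, we can use the point-slope form of the equation of a line: $y - y_1 = \frac{y_2 - y_1}{x_2 - x_1}(x - x_1)$.

Plugging in the values, we get: $f(x + dx) - f(x) = \frac{f(x + dx) - f(x)}{(x + dx) - x} \cdot ((x + dx) - x)$.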

What is interesting about this secant formula is that, as we will see, it gives us a neat approximation of f near x.

Let’s define the secant slope $m = \frac{f(x + dx) - f(x)}{dx}$. So now we have:

$f(x + dx) - f(x) = m \cdot dx$.

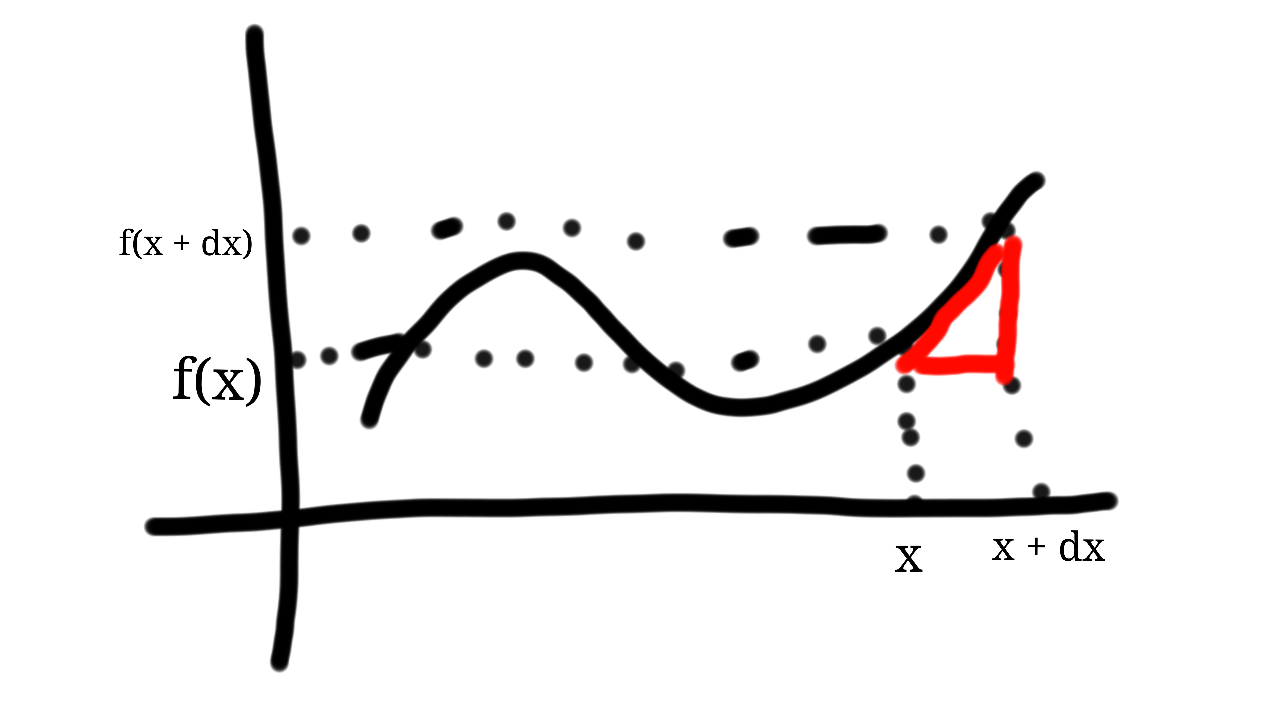

The limit of $\frac{f(x + dx) - f(x)}{dx}$ as dx approaches 0 will give us the actual slope at x (the slope of the tangent line there).

So, let’s define $f'(x) = \lim_{dx \to 0} \frac{f(x + dx) - f(x)}{dx}$. This slope is actually our definition of a derivative. This definition lies at the heart of calculus.
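To see the limit at work numerically, here is a minimal Python sketch. It assumes $f(x) = x^2$ as an illustrative function, and the names `secant_slope` and `square` are made up for this example. It computes the secant slope at x = 1 for smaller and smaller dx. For this particular function the slope works out to 2 + dx, so the printed values should creep toward 2.

```python
def secant_slope(f, x, dx):
    """Slope of the secant through (x, f(x)) and (x + dx, f(x + dx))."""
    return (f(x + dx) - f(x)) / dx


def square(x):
    # Illustrative function (an assumption for this sketch); its derivative is 2x.
    return x * x


# Shrink dx and watch the secant slope at x = 1 approach the derivative.
for dx in (0.5, 0.1, 0.01, 0.001):
    slope = secant_slope(square, 1.0, dx)
    print(f"dx = {dx}: secant slope = {slope:.4f}")
```

The division by dx is why we take a limit rather than simply setting dx = 0: at dx = 0 the quotient is undefined.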

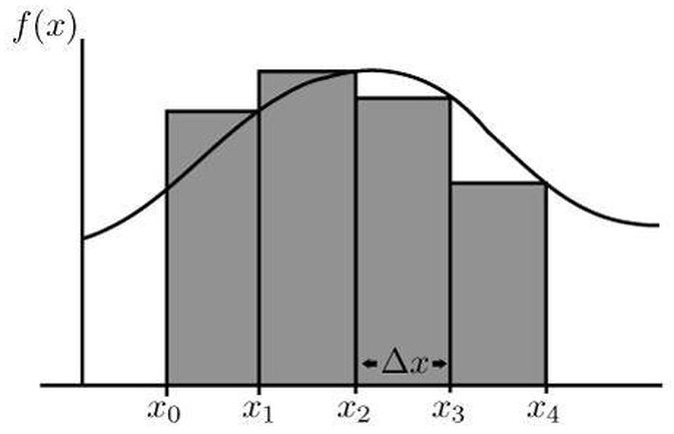

The image below (taken from Wikipedia) demonstrates this for h = dx.

Back to the secant approximation, we now have: $f(x + dx) - f(x) \approx f'(x) \cdot dx$. This is an approximation rather than an equality because we took the limit in the slope term but kept a finite dx in the rest. As $dx \to 0$, the approximation becomes an equality.

For example, to calculate the square of 1.5, we let x = 1 and dx = 0.5. Additionally, if $f(x) = x^2$ then $f'(x) = 2x$. So $f(1.5) \approx f(1) + f'(1) \cdot 0.5 = 1 + 2 \cdot 0.5 = 2$. The exact value is 2.25, so that’s an error of just 0.25 for dx = 0.5. Algebra shows the error in this particular case to be $dx^2$. For dx = 0.1, the error is just 0.01.

Pretty cool, right?
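The same numbers can be checked with a short Python sketch. It assumes the approximation is used in the form f(x + dx) ≈ f(x) + f'(x)·dx, and the helper names (`linear_approx`, `square`, `square_prime`) are made up for illustration.

```python
def linear_approx(f, f_prime, x, dx):
    """Approximate f(x + dx) using the value and the slope at x."""
    return f(x) + f_prime(x) * dx


def square(x):
    return x * x


def square_prime(x):
    # Derivative of x^2.
    return 2 * x


# Compare the approximation against the exact value for two step sizes.
for dx in (0.5, 0.1):
    approx = linear_approx(square, square_prime, 1.0, dx)
    exact = square(1.0 + dx)
    print(f"dx = {dx}: approx = {approx:.4f}, exact = {exact:.4f}, error = {exact - approx:.4f}")
```

For $f(x) = x^2$ the gap is exactly $(x + dx)^2 - (x^2 + 2x \cdot dx) = dx^2$, which is why the error shrinks so quickly as dx gets smaller.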

Here are a few of the many applications that show why derivatives are useful:

- We can use the value of the slope to find min/max using gradient descent

- We can determine the rate of change given the slope

- We can find ranges of monotonicity

- We can do neat approximations, as shown

Integrals allow us to calculate the area under a function’s curve. As an example, we’ll calculate the area of the function in the interval

. Recall that the area of a rectangle with size $w$ by $h$ is $w \cdot h$. Our approach will be to construct many smaller rectangles and sum their areas.

We start with the case $n = 2$, that is, two rectangles. We have the data points

, which give us two rectangles with width $1$ and height

and

respectively. Note that the width is constant because the points are evenly spaced. To get the total area, we just sum

. But note that there’s an error, since the rectangles do not cover the whole area. The main idea is the more rectangles, the less the error and the closer we get to the actual value.

Next, consider the case $n = 4$. We have the data points

. Since we have four elements, in the range

each element has a width of $\frac{1}{2}$. The result is

.

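Looking at these cases suggests how to generalize. First, note the differences between the points in $x$: when $n = 2$, the difference between any consecutive points in $x$ is 1, and when $n = 4$, the difference is $\frac{1}{2}$. Generalizing for $n$ rectangles over an interval $[a, b]$, the difference between $x_i$ and $x_{i+1}$ will be $\frac{b - a}{n}$. Also, generalizing the summation gives $\sum_{i=0}^{n-1} f(x_i)(x_{i+1} - x_i)$, and since we only consider evenly spaced intervals we have $x_{i+1} - x_i = dx$, for all $i$. This is called a Riemann sum and defines the integral $\int_a^b f(x)\,dx = \lim_{n \to \infty} \sum_{i=0}^{n-1} f(x_i)\,dx$, where $dx = \frac{b - a}{n}$. Also, since $a$ is the starting point, that gives $x_i = a + i \cdot dx$.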
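The rectangle-summing idea can be sketched in a few lines of Python. This is a minimal sketch that assumes left endpoints (each rectangle's height is taken at its left edge) and evenly spaced points. The function name `riemann_sum` and the example $f(x) = x$ on $[0, 2]$ are chosen here only for illustration.

```python
def riemann_sum(f, a, b, n):
    """Left Riemann sum of f over [a, b] using n rectangles of equal width."""
    dx = (b - a) / n  # width of every rectangle
    # The i-th rectangle starts at a + i * dx and has height f(a + i * dx).
    return sum(f(a + i * dx) * dx for i in range(n))


def identity(x):
    # Illustrative function (an assumption for this sketch): f(x) = x.
    return x


# More rectangles means less uncovered area, so the sum approaches the true area.
for n in (2, 4, 100, 10000):
    print(f"n = {n}: area is approximately {riemann_sum(identity, 0, 2, n):.4f}")
```

For this assumed example the true area is 2 (a triangle with base 2 and height 2), and the sums approach it from below as n grows.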

Going back to the example, to find the integral for , we need to calculate

. From here, we evaluate the inner sum

. Plugging back in gives

.

Now we can take the limit of this as $n \to \infty$. Note that

so we have

, which finally gives

. This represents the sum of the area

under the curve of the function

.
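As a worked illustration of the limit step, here is the full calculation for an assumed example: $f(x) = x$ on the interval $[0, 2]$, using left endpoints. This function and interval are chosen only for illustration and are not necessarily the ones used above.

$$
\begin{aligned}
dx &= \frac{2 - 0}{n} = \frac{2}{n}, \qquad x_i = 0 + i \cdot dx = \frac{2i}{n} \\
\sum_{i=0}^{n-1} f(x_i)\,dx &= \sum_{i=0}^{n-1} \frac{2i}{n} \cdot \frac{2}{n} = \frac{4}{n^2} \sum_{i=0}^{n-1} i = \frac{4}{n^2} \cdot \frac{n(n-1)}{2} = 2 - \frac{2}{n} \\
\int_0^2 x\,dx &= \lim_{n \to \infty} \left(2 - \frac{2}{n}\right) = 2
\end{aligned}
$$

The result matches the area of the triangle with base 2 and height 2, which is $\frac{1}{2} \cdot 2 \cdot 2 = 2$.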